Kubernetes Hacks and Tricks – #7 Pod and Container Resource and QoS classes

Do you know how Kubernetes decides which Pods to evict when a node's resources are running out? In this article, we will discuss this and introduce the Kubernetes Quality of Service (QoS) classes that play a part in that decision.

Follow our social media:

https://www.linkedin.com/in/ssbostan

https://www.linkedin.com/company/kubedemy

https://www.youtube.com/@kubedemy

What is Node Pressure?

Node pressure describes a worker node whose resources are running out. The resource under pressure can be memory, storage, PID space, and so on. When a worker node comes under pressure, the kubelet tries to reclaim resources, which can mean evicting some Pods from the node. The evicted applications go down and have to be started somewhere else, so you may experience downtime in your system, and that is a nightmare for everyone.
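As a rough illustration, node pressure is reported through the Node's status conditions (`MemoryPressure`, `DiskPressure`, `PIDPressure`). The sketch below assumes you already have a Node's `status` as a plain dictionary, for example parsed from `kubectl get node <name> -o json`; the helper name `pressured_conditions` and the sample data are made up for this example.

```python
# Node condition types that signal resource pressure.
PRESSURE_CONDITIONS = {"MemoryPressure", "DiskPressure", "PIDPressure"}

def pressured_conditions(node_status):
    """Return the sorted pressure condition types whose status is "True"."""
    return sorted(
        c["type"]
        for c in node_status.get("conditions", [])
        if c.get("type") in PRESSURE_CONDITIONS and c.get("status") == "True"
    )

# Hypothetical sample, shaped like the `status` field of a Node object.
sample_status = {
    "conditions": [
        {"type": "MemoryPressure", "status": "True"},
        {"type": "DiskPressure", "status": "False"},
        {"type": "PIDPressure", "status": "False"},
        {"type": "Ready", "status": "True"},
    ]
}

print(pressured_conditions(sample_status))  # ['MemoryPressure']
```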

Kubernetes Quality of Service (QoS) classes:

Kubernetes defines three QoS classes, which help it determine which Pods should be evicted first on rainy days: Guaranteed, Burstable and BestEffort. When a node runs out of resources, Kubernetes typically evicts the BestEffort Pods running on the node first, followed by Burstable and finally Guaranteed Pods, to make room for Pods that need more resources. Strictly speaking, the kubelet ranks Pods by whether their usage exceeds their requests and by Pod priority, so this order is the usual outcome rather than a hard rule.
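The eviction order described above can be sketched as a simple sort. This is a deliberately simplified model: it looks only at the QoS class and ignores the other factors the kubelet weighs, such as usage relative to requests and Pod priority. The Pod names and the `eviction_order` helper are hypothetical.

```python
# Lower rank means evicted earlier in this simplified model.
EVICTION_RANK = {"BestEffort": 0, "Burstable": 1, "Guaranteed": 2}

def eviction_order(pods):
    """pods: list of (name, qos_class) tuples. Returns names, first-to-evict first."""
    ranked = sorted(pods, key=lambda pod: EVICTION_RANK[pod[1]])
    return [name for name, _ in ranked]

# Hypothetical Pods running on one node.
pods = [("db", "Guaranteed"), ("batch", "BestEffort"), ("web", "Burstable")]

print(eviction_order(pods))  # ['batch', 'web', 'db']
```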

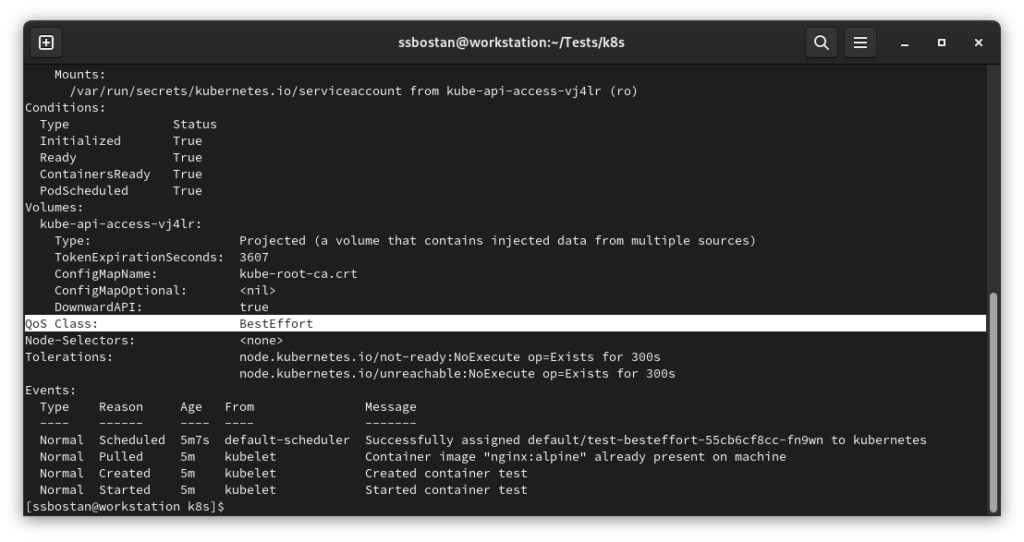

BestEffort QoS class:

When you don’t specify any CPU or memory requests or limits for the containers in a Pod, the Pod runs in this QoS class. This class has the lowest priority on the node, and its Pods are the first candidates for eviction when the node is under pressure.

BestEffort means containers can use as many resources as happen to be available, but because you didn’t provide any requests or limits, there is no guarantee that they will stay alive as you expect. They can use node resources whenever some are free, and they are the first target for eviction when the node comes under pressure. They make their best effort, and you need to pray and hope they will run as long as you expect.
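To make the rule concrete, here is a minimal sketch that checks whether a Pod spec would land in BestEffort. It assumes the spec is a plain dictionary shaped like the `spec` field of a Pod manifest, and for brevity it looks only at `containers`. The function name `is_best_effort` is invented for this example.

```python
# Only CPU and memory are considered when the QoS class is determined.
QOS_RESOURCES = ("cpu", "memory")

def is_best_effort(pod_spec):
    """True if no container sets a CPU or memory request or limit."""
    for container in pod_spec.get("containers", []):
        resources = container.get("resources", {})
        for kind in ("requests", "limits"):
            values = resources.get(kind) or {}
            if any(r in values for r in QOS_RESOURCES):
                return False
    return True

# Hypothetical Pod spec with no resources at all.
bare_pod = {"containers": [{"name": "app", "image": "nginx"}]}

print(is_best_effort(bare_pod))  # True
```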

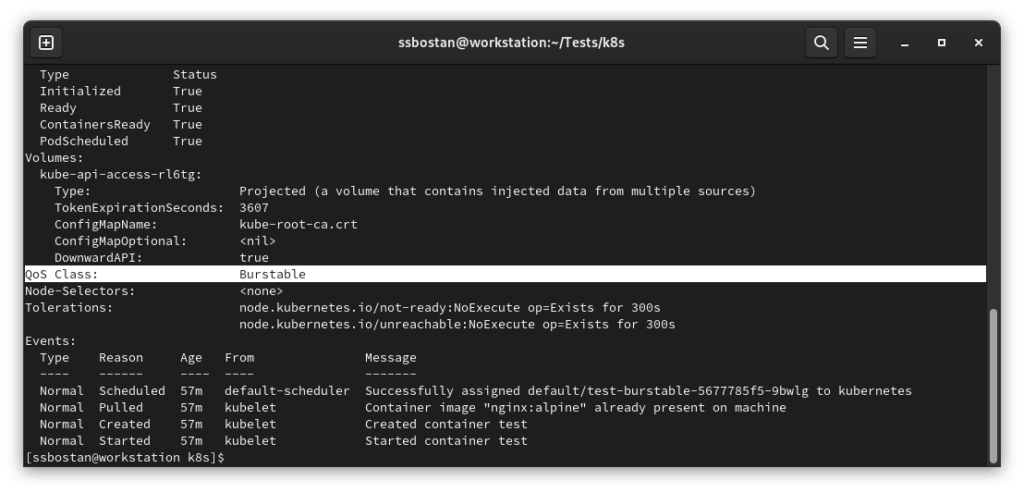

Burstable QoS class:

A Pod falls into the Burstable class when at least one of its containers has a CPU or memory request or limit, but the Pod does not meet the criteria for Guaranteed. The typical case is a Pod whose requests are lower than its limits. The Kubernetes scheduler places Pods based on their resource requests and has nothing to do with limits. Limits are enforced once the Pod runs on the worker node: a container that tries to use more CPU than its limit is throttled, and one that tries to use more memory than its limit is killed (OOMKilled). Still, there is another hidden fact about this QoS class! There is no guarantee that the worker node can provide resources all the way up to the Pod’s limits. Suppose a Pod is scheduled on a worker node based on its requests, the node then comes under pressure because physical resources run short, and the container tries to consume more than its requests because it expects to burst up to its limits. In that case, the kubelet may evict Pods of this QoS class so that Guaranteed Pods on that node can use the resources they requested.

So you need to be cautious and know exactly what you are doing in the cluster and on each worker node, and how many resources you need now and in the future. Note that if you allocate inappropriate resources, the node may become overutilized and BOOOM.
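A small worked example shows how a node can be "full" by requests yet overcommitted by limits. The numbers below are invented: a node with 2000 millicores and 4096 MiB allocatable, and three identical Burstable Pods. The helpers are only a sketch of the arithmetic, not of the real scheduler, which takes many more factors into account.

```python
# Hypothetical node: allocatable CPU in millicores, memory in MiB.
allocatable = {"cpu": 2000, "memory": 4096}

# Three hypothetical Burstable Pods: requests are lower than limits.
pods = [
    {"requests": {"cpu": 500, "memory": 1024}, "limits": {"cpu": 1000, "memory": 2048}}
    for _ in range(3)
]

def total(pods, kind, resource):
    """Sum one resource over all Pods, for 'requests' or 'limits'."""
    return sum(p[kind][resource] for p in pods)

def fits_by_requests(pods, allocatable):
    """True if the summed requests fit on the node for every resource."""
    return all(total(pods, "requests", r) <= allocatable[r] for r in allocatable)

def limit_overcommit(pods, allocatable):
    """Ratio of summed limits to allocatable; above 1.0 means overcommitted."""
    return {r: total(pods, "limits", r) / allocatable[r] for r in allocatable}

print(fits_by_requests(pods, allocatable))  # True
print(limit_overcommit(pods, allocatable))  # {'cpu': 1.5, 'memory': 1.5}
```

All three Pods fit by requests, but if they all burst to their limits at once they would need 1.5 times what the node has, which is exactly the situation where pressure and evictions appear.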

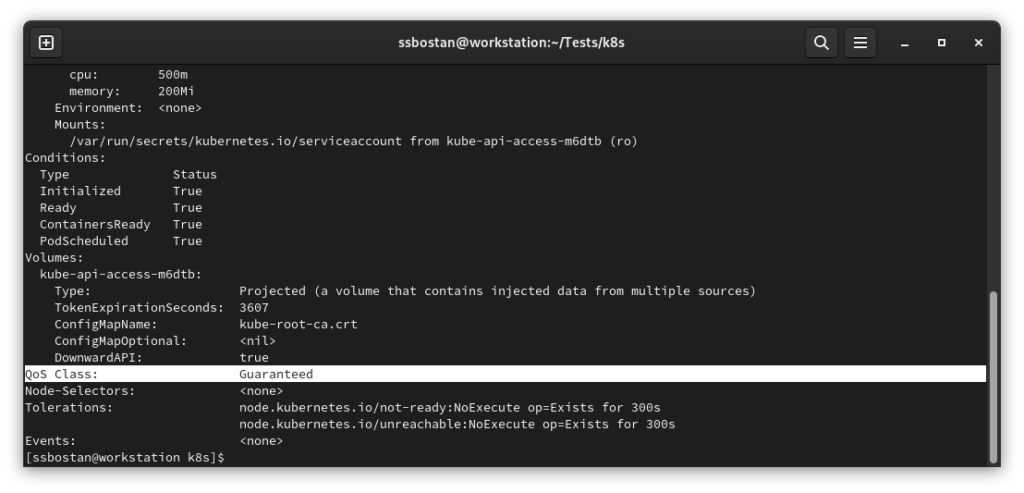

Guaranteed QoS class:

The best one for the app, the bad one for your pocket. When every container in the Pod has both CPU and memory requests and limits, and each request equals its limit, your Pod runs in the Guaranteed QoS class. It means it will be the last candidate for eviction from the node. You should be aware of two critical things. The first is how many resources your Pod really needs, and the second is how to spread your Pods across worker nodes to prevent node underutilization. The Kubernetes scheduler schedules based on resource requests, so if you set requests and limits to the same values but lower than what the Pod really needs, the Pod will still be scheduled on a node, but its container gets restarted with the “OOMKilled” status when it tries to consume more memory than its limit. On the other hand, if you set more than it needs, your node will be underutilized, and you will pay for what you do not use.

To run Pods with the Guaranteed QoS class, create a proper spread topology to avoid underutilization, and run fair stress tests that resemble your real-world application traffic to find the right amount of resources for the application and prevent restarts.
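Putting the three classes together, the classification rules can be sketched as one function. This is a simplified model, not the kubelet's code: it only inspects `containers`, it compares quantities as plain strings (so `"1"` and `"1000m"` would count as different), and it assumes that a missing request defaults to the limit. The name `qos_class` and the sample specs are hypothetical.

```python
QOS_RESOURCES = ("cpu", "memory")

def qos_class(pod_spec):
    """Classify a Pod spec dict as Guaranteed, Burstable or BestEffort."""
    any_set = False
    all_equal = True
    for container in pod_spec.get("containers", []):
        resources = container.get("resources", {})
        requests = resources.get("requests") or {}
        limits = resources.get("limits") or {}
        for r in QOS_RESOURCES:
            limit = limits.get(r)
            request = requests.get(r, limit)  # assumed default: request = limit
            if limit is not None or request is not None:
                any_set = True
            if limit is None or request != limit:
                all_equal = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if all_equal else "Burstable"

# Hypothetical specs, one per class.
guaranteed = {"containers": [{"resources": {
    "requests": {"cpu": "500m", "memory": "256Mi"},
    "limits": {"cpu": "500m", "memory": "256Mi"}}}]}
burstable = {"containers": [{"resources": {
    "requests": {"cpu": "250m", "memory": "256Mi"},
    "limits": {"cpu": "500m", "memory": "256Mi"}}}]}
best_effort = {"containers": [{"name": "app"}]}

for spec in (guaranteed, burstable, best_effort):
    print(qos_class(spec))
```

On a real cluster you do not need to compute this yourself: the assigned class is reported in the Pod's `status.qosClass` field.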

Conclusion:

BestEffort has the lowest priority and is the first candidate for eviction from the worker node. There is no guarantee that it will run properly with adequate resources. It can get evicted at any time. Use it carefully and never use it for production workloads.

Burstable has the second priority, and in the case of node pressure, it will be evicted after BestEffort Pods. Setting the wrong requests or limits can cause overutilization and significantly impact all of the Pods running on the node.

Guaranteed has the highest priority and is evicted last, after Burstable Pods. Setting inappropriate resources can leave the Pod in an endless restart loop, leave the node underutilized, or, if you request more than any node has, leave the Pod stuck in the Pending state.

Keep your eyes open for the following articles about spread topologies and priority classes to learn how you should deploy production-grade workloads in Kubernetes clusters.

If you like this series of articles, please share them and write your thoughts as comments here. Your feedback encourages me to complete this massively planned program.

Follow Kubedemy Telegram https://telegram.me/kubedemy