AWS EKS – Part 35 – Kubernetes Storage using EFS CSI Driver

AWS EFS (Elastic File System) is a simple, scalable, and highly available NFS-based file system designed to be mounted concurrently by multiple EC2 instances and on-premises servers. It can also be integrated with AWS EKS to provide persistent storage for stateful applications. In this lesson, you will learn how to create an EFS file system, set up the EFS CSI Driver, and use EFS as persistent storage within a Kubernetes cluster.

Follow our social media:

https://linkedin.com/in/ssbostan

https://linkedin.com/company/kubedemy

Register for the FREE EKS Tutorial:

If you want to access the course materials, register from the following link:

Register for the FREE AWS EKS Black Belt Course

What is AWS EFS?

AWS EFS is a scalable, fully managed file storage service for use with AWS cloud services and on-premises resources. It offers automatic scaling, high availability, and durability; Regional file systems replicate data across multiple Availability Zones. EFS supports the NFS protocol and encryption at rest and in transit, and it integrates with services like AWS EC2, AWS Lambda, and AWS EKS. When used with EKS, EFS allows Kubernetes Pods to persist and share data, improving data durability and accessibility for stateful applications.

How does EFS fit in Kubernetes?

The EFS CSI Driver adds support for both static and dynamic provisioning within the cluster. In dynamic provisioning, the driver creates an EFS access point for each Kubernetes PersistentVolume within the same EFS file system. It doesn't create the file system itself, so for both static and dynamic provisioning you must create the EFS file system manually. EFS supports the ReadWriteMany access mode, which allows a volume to be mounted on multiple worker nodes simultaneously.
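In static provisioning, you write the PersistentVolume yourself and point it at the file system ID, and no access point is created. The following is a minimal sketch that prints such a PV so you can review it before applying it. The function name `efs_static_pv`, the PV name, and the 5Gi size are hypothetical placeholders. The `efs.csi.aws.com` driver name and the bare file system ID as `volumeHandle` follow the driver's static provisioning format.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a statically provisioned EFS PersistentVolume.
# $1 = EFS file system ID, $2 = PersistentVolume name (both chosen by you).
efs_static_pv() {
  local fs_id="$1" pv_name="$2"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${pv_name}
spec:
  capacity:
    storage: 5Gi              # required by Kubernetes, not enforced by EFS
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""        # empty class: only PVCs that also request "" bind to it
  csi:
    driver: efs.csi.aws.com
    volumeHandle: ${fs_id}    # mounts the root of the whole file system
EOF
}

# Usage:
# efs_static_pv fs-00bb39dbc10d9f255 efs-static-pv | kubectl apply -f -
```

A PVC that uses this PV would set `storageClassName: ""` and `volumeName: efs-static-pv`. This is also the form you need for Pods running on Fargate.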

EFS + EKS Considerations and Limitations:

- Dynamic provisioning doesn’t work for Pods running on Fargate, so you must use static provisioning there. You don't need to install the driver for Fargate because it is available there by default.

- EFS has no pre-provisioned capacity. It grows and shrinks automatically as data is added and removed. This means Kubernetes requires a storage size in the PVC definition but EFS doesn't enforce it, and Pods can write as much data as they need (you pay for what is actually stored).

- EFS performance differs significantly from that of local storage and EBS (notably, it has higher latency), so it’s not a good fit for applications that need high-performance storage.
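Since PVC sizes aren't enforced, the figure that matters for billing is the file system's metered size. The following is a small sketch that reads it through the standard `aws efs describe-file-systems` call. The function names are hypothetical. EFS updates this value periodically, so treat it as approximate rather than real-time.

```shell
#!/usr/bin/env bash
# Print the metered size (in bytes) that EFS reports for a file system.
# This is what you are billed on; PVC "storage" requests do not cap it.
# $1 = EFS file system ID
efs_metered_bytes() {
  local fs_id="$1"
  aws efs describe-file-systems \
    --file-system-id "$fs_id" \
    --query 'FileSystems[0].SizeInBytes.Value' \
    --output text
}

# Convert a byte count to whole GiB (rounded down) for a quick human check.
bytes_to_gib() {
  echo $(( $1 / 1024 / 1024 / 1024 ))
}

# Usage:
# bytes_to_gib "$(efs_metered_bytes fs-00bb39dbc10d9f255)"
```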

AWS EFS in EKS setup procedure:

- Create the EFS storage in your AWS account.

- Set up an IAM role that allows the EFS CSI Driver to access the EFS storage.

- Deploy and configure the EFS CSI Driver.

- Deploy a test application and test the EFS storage.

Step 1 – Create EFS for EKS Persistent Storage:

To create an EFS file system, use the following command. You can enable or disable encryption and automatic backups, use your own KMS key for encryption, and change the performance and throughput modes. Cost varies depending on the configuration.

aws efs create-file-system \

--performance-mode generalPurpose \

--encrypted \

--throughput-mode elastic \

--backup \

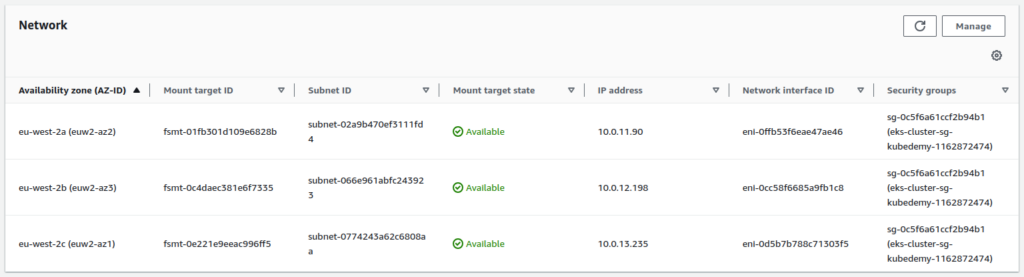

--tags Key=owner,Value=kubedemyAfter creating the filesystem, we must create a mount target in each AZ where we want to mount it. For example, if you have an EKS cluster with worker nodes in three AZs, you must create three mount targets to use the EFS within all of them.
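A new file system starts in the `creating` state, so a script should wait for it to become `available` before creating mount targets. The following is a sketch that assumes you script these steps. It uses the same create flags as above with `--query FileSystemId` added to capture the ID, and a polling loop over `LifeCycleState`. The function names and the default retry values are hypothetical.

```shell
#!/usr/bin/env bash
# Create the file system with the same flags as above and print only its ID.
create_efs() {
  aws efs create-file-system \
    --performance-mode generalPurpose \
    --encrypted \
    --throughput-mode elastic \
    --backup \
    --tags Key=owner,Value=kubedemy \
    --query 'FileSystemId' \
    --output text
}

# Poll until LifeCycleState is "available".
# $1 = file system ID, $2 = max checks (default 30), $3 = seconds between checks (default 10)
wait_for_efs() {
  local fs_id="$1" attempts="${2:-30}" delay="${3:-10}" state="" i=1
  while [ "$i" -le "$attempts" ]; do
    state="$(aws efs describe-file-systems --file-system-id "$fs_id" \
      --query 'FileSystems[0].LifeCycleState' --output text)"
    [ "$state" = "available" ] && return 0
    sleep "$delay"
    i=$((i + 1))
  done
  echo "EFS $fs_id not available after $attempts checks (last state: $state)" >&2
  return 1
}

# Usage:
# FS_ID="$(create_efs)" && wait_for_efs "$FS_ID"
```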

aws efs create-mount-target \
  --file-system-id fs-00bb39dbc10d9f255 \
  --subnet-id subnet-02a9b470ef3111fd4 \
  --security-groups sg-0c5f6a61ccf2b94b1

aws efs create-mount-target \
  --file-system-id fs-00bb39dbc10d9f255 \
  --subnet-id subnet-066e961abfc243923 \
  --security-groups sg-0c5f6a61ccf2b94b1

aws efs create-mount-target \
  --file-system-id fs-00bb39dbc10d9f255 \
  --subnet-id subnet-0774243a62c6808aa \
  --security-groups sg-0c5f6a61ccf2b94b1

You can use private subnets to create mount targets.

Note: Use the same security group as the cluster, or any security group that allows inbound NFS traffic (TCP port 2049) from the worker nodes.
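For more subnets or other clusters, you can generate the three commands above with a loop and look up the cluster security group instead of copying it by hand. The following sketch makes these assumptions: the function names are hypothetical, you pass exactly one subnet per AZ (EFS allows only one mount target per Availability Zone), and your nodes use the cluster security group mentioned in the note.

```shell
#!/usr/bin/env bash
# Look up the EKS cluster security group. $1 = EKS cluster name
cluster_sg() {
  aws eks describe-cluster --name "$1" \
    --query 'cluster.resourcesVpcConfig.clusterSecurityGroupId' \
    --output text
}

# Create one mount target per subnet; stop at the first failure.
# $1 = file system ID, $2 = security group ID, remaining args = subnet IDs (one per AZ)
create_mount_targets() {
  local fs_id="$1" sg_id="$2" subnet
  shift 2
  for subnet in "$@"; do
    aws efs create-mount-target \
      --file-system-id "$fs_id" \
      --subnet-id "$subnet" \
      --security-groups "$sg_id" || return 1
  done
}

# Usage (replace "kubedemy" with your cluster name):
# SG_ID="$(cluster_sg kubedemy)"
# create_mount_targets fs-00bb39dbc10d9f255 "$SG_ID" \
#   subnet-02a9b470ef3111fd4 subnet-066e961abfc243923 subnet-0774243a62c6808aa
```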

Step 2 – Set Up IAM Role for EFS CSI Driver:

The IAM role configuration can be different depending on what you have and what you need. AWS provides a managed policy for the EFS CSI Driver, which can access all EFS file systems within your account. You can use that or create a custom policy with the same permissions but limited to a specific EFS file system. Depending on the cluster security setup, the policy can be attached to the Node IAM Role or an IRSA role for the EFS CSI Driver. In this lesson, I will attach the managed policy to the node IAM role. To use IRSA instead, read the following article explaining how to set it up.

AWS EKS – Part 13 – Setup IAM Roles for Service Accounts (IRSA)
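If you prefer a custom policy limited to one file system, a reasonable starting point is the managed policy's current document, which you can review and then narrow. The following sketch uses the standard `aws iam get-policy` and `get-policy-version` calls; the function name is hypothetical. Some EFS actions may still need broader resources than a single file system ARN, so test any narrowed policy before relying on it.

```shell
#!/usr/bin/env bash
# Print the current JSON document of a managed IAM policy.
# $1 = policy ARN
print_policy_document() {
  local arn="$1" version
  version="$(aws iam get-policy --policy-arn "$arn" \
    --query 'Policy.DefaultVersionId' --output text)" || return 1
  aws iam get-policy-version --policy-arn "$arn" --version-id "$version" \
    --query 'PolicyVersion.Document' --output json
}

# Usage:
# print_policy_document arn:aws:iam::aws:policy/service-role/AmazonEFSCSIDriverPolicy
```

The rest of this lesson attaches the managed policy as-is.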

aws iam attach-role-policy \
  --policy-arn arn:aws:iam::aws:policy/service-role/AmazonEFSCSIDriverPolicy \
  --role-name Kubedemy_EKS_Managed_Nodegroup_Role

Step 3 – Deploy and Configure EFS CSI Driver:

The EFS CSI Driver can be installed using either Helm or EKS add-ons. Since we installed the EBS CSI Driver as an EKS add-on in the previous lesson, I'll install this driver with Helm to show you how to install EKS add-on components with Helm instead.
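For comparison, the EKS add-on route for the same driver could look like the following sketch, which uses `aws eks create-addon` with the add-on name `aws-efs-csi-driver`. The function name and the optional version argument are hypothetical. When no version is given, EKS is expected to pick its default version for your cluster. This lesson continues with Helm.

```shell
#!/usr/bin/env bash
# Alternative to Helm: install the EFS CSI Driver as an EKS add-on.
# $1 = cluster name, $2 = add-on version (optional)
install_efs_addon() {
  local cluster="$1" version="$2"
  if [ -n "$version" ]; then
    aws eks create-addon --cluster-name "$cluster" \
      --addon-name aws-efs-csi-driver --addon-version "$version"
  else
    aws eks create-addon --cluster-name "$cluster" --addon-name aws-efs-csi-driver
  fi
}

# Usage (replace "kubedemy" with your cluster name):
# install_efs_addon kubedemy
```

Unlike the Helm values used in this lesson, the add-on doesn't create a StorageClass for you.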

helm repo add aws-efs-csi-driver https://kubernetes-sigs.github.io/aws-efs-csi-driver

cat <<EOF > efs-csi-driver.yaml
controller:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 100
          preference:
            matchExpressions:
              - key: node.kubernetes.io/scope
                operator: In
                values:
                  - system
storageClasses:
  - name: efs
    parameters:
      provisioningMode: efs-ap
      fileSystemId: fs-00bb39dbc10d9f255
      directoryPerms: "700"
      gidRangeStart: "1000"
      gidRangeEnd: "2000"
      basePath: "/eks-efs-storage"
    reclaimPolicy: Delete
    volumeBindingMode: Immediate
EOF

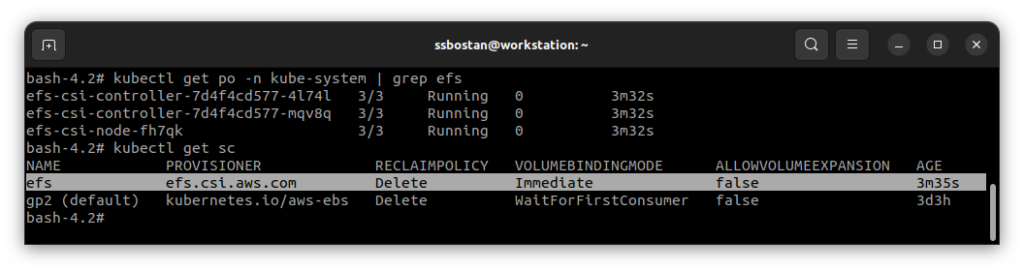

helm install efs-csi-driver aws-efs-csi-driver/aws-efs-csi-driver --namespace kube-system -f efs-csi-driver.yaml

Note: Remember to change fileSystemId to your own.
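The `storageClasses` entry in the values file produces a regular StorageClass. If you install the driver without these Helm values (for example, as an EKS add-on), you can create an equivalent StorageClass yourself. The following is a minimal sketch; the function name is hypothetical, and the parameters are copied from the values file above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a StorageClass equivalent to the chart's
# storageClasses entry. $1 = EFS file system ID.
efs_storageclass() {
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: efs
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap          # one access point per PersistentVolume
  fileSystemId: $1
  directoryPerms: "700"
  gidRangeStart: "1000"
  gidRangeEnd: "2000"
  basePath: "/eks-efs-storage"
reclaimPolicy: Delete
volumeBindingMode: Immediate
EOF
}

# Usage:
# efs_storageclass fs-00bb39dbc10d9f255 | kubectl apply -f -
```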

After installing the driver, you should be able to see its Pods and the StorageClass.

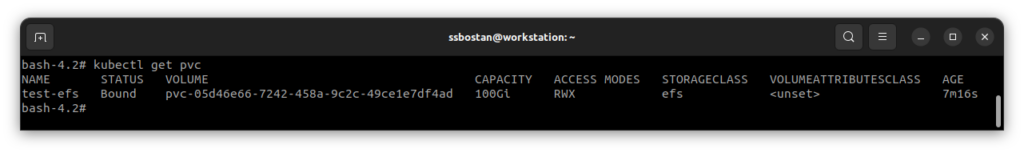
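You can check the same things from the command line. The following sketch wraps three `kubectl get` calls in a hypothetical `check_efs_driver` function. It assumes the chart labels its Pods with `app.kubernetes.io/name=aws-efs-csi-driver`; if your chart version labels them differently, adjust the selector.

```shell
#!/usr/bin/env bash
# Quick post-install checks for the EFS CSI Driver; fails on the first missing piece.
check_efs_driver() {
  # Controller and node Pods installed by the Helm chart (label is an assumption).
  kubectl get pods -n kube-system -l app.kubernetes.io/name=aws-efs-csi-driver || return 1
  # The CSIDriver object registered for the driver.
  kubectl get csidriver efs.csi.aws.com || return 1
  # The StorageClass created from the chart values.
  kubectl get storageclass efs
}
```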

Step 4 – Use EFS in ReadWriteMany mode:

Use the following manifest to create a Kubernetes PVC backed by the EFS file system. As mentioned above, EFS supports ReadWriteMany mode, and the requested storage size is not enforced. Creating a PVC with the EFS StorageClass creates a new access point in the EFS file system, so all PVCs share the same file system.

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-efs
spec:
  storageClassName: efs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Gi
EOF
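Because the StorageClass uses `volumeBindingMode: Immediate`, the PVC should bind right away, and a new access point should appear on the file system. The following is a sketch for checking both. The function names are hypothetical; the `kubectl` JSONPath query and `aws efs describe-access-points` are standard.

```shell
#!/usr/bin/env bash
# Print the PVC phase (expected: Bound) and the PV it is bound to. $1 = PVC name
pvc_status() {
  kubectl get pvc "$1" -o jsonpath='{.status.phase} {.spec.volumeName}{"\n"}'
}

# List access points (ID and root directory) on the file system. $1 = file system ID
list_access_points() {
  aws efs describe-access-points --file-system-id "$1" \
    --query 'AccessPoints[].[AccessPointId,RootDirectory.Path]' --output text
}

# Usage:
# pvc_status test-efs
# list_access_points fs-00bb39dbc10d9f255
```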

Now, create a Deployment with more than one replica, all mounting the same volume simultaneously. It works without issue, and all the Pods can access the storage.

cat <<"EOF" | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-efs
spec:
  replicas: 3
  selector:
    matchLabels:
      app: test-efs
  template:
    metadata:
      labels:
        app: test-efs
    spec:
      containers:
        - name: alpine
          image: alpine:latest
          command: ["sh", "-c", "echo kubedemy > /data/$(POD_NAME) && sleep infinity"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
          volumeMounts:
            - name: test-efs
              mountPath: /data
      volumes:
        - name: test-efs
          persistentVolumeClaim:
            claimName: test-efs
EOF

All Pods in the above Deployment will create a file within the shared storage.
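To confirm that the storage really is shared, list `/data` from any one replica. It should contain a file named after each Pod. The following is a sketch with hypothetical function names. It checks for at least as many files as current Pods, since files written by replaced Pods stay on EFS.

```shell
#!/usr/bin/env bash
# List the shared /data directory from one replica of the test Deployment.
shared_files() {
  kubectl exec deploy/test-efs -- ls /data
}

# Succeed if the shared directory holds at least one file per current Pod.
check_shared_files() {
  local pods files
  pods="$(kubectl get pods -l app=test-efs -o name | grep -c .)"
  files="$(shared_files | grep -c .)"
  [ "$files" -ge "$pods" ]
}

# Usage:
# check_shared_files && echo "all Pods wrote to the shared EFS volume"
```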

Conclusion:

EFS is a highly available file system backed by an AWS uptime SLA (99.9% or higher, depending on whether the file system is One Zone or Regional). It allows you to deploy stateful applications within Kubernetes clusters. Although its performance and throughput are not comparable to local storage and EBS volumes, it can serve your needs without requiring third-party storage solutions. In future lessons, you will learn how to integrate S3 with EKS for higher availability and resilience.

If you like this series of articles, please share them and write your thoughts as comments here. Your feedback encourages me to complete this massively planned program. I’ll make you an AWS EKS black belt.

Follow my LinkedIn https://linkedin.com/in/ssbostan

Follow Kubedemy’s LinkedIn https://linkedin.com/company/kubedemy

Follow Kubedemy’s Telegram https://telegram.me/kubedemy